Saurabh Garg – Navigating Cloud Expenses in Data & AI: Strategies for Scientists and Engineers

www.pydata.org

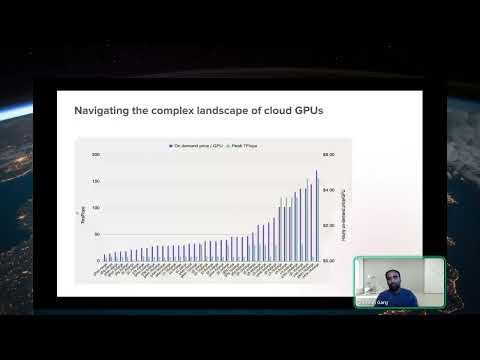

Data rules the world, and data scientists and ML engineers (MLEs) across academia and industry are creating new and innovative ways to glean insights, which have changed our lives through intuitive, easy-to-understand interfaces. At the heart of the AI/ML revolution (genAI, LLMs, bioinformatics, climate science, etc.) is the availability and elasticity of state-of-the-art hardware, which enables processing large swaths of data (TBs) that could not run on local laptops for want of compute or memory. Cloud providers have commoditized these powerful machines to the extent that they are now available to anyone with a few clicks.

Cloud computing allows us to trade off upfront hardware costs for granular operational expenses, such as renting GPUs by the second. Prima facie this might seem like a winning formula, but a key downside is that these costs often add up uncontrollably. Attributing the usage of such hardware to Data/AI/ML jobs across dimensions like cloud accounts, instances, and workloads, down to the lowest level of granularity, can bring transparency not only to cost but to resource management as well.

Through our work with open-source Metaflow, which started at Netflix in 2017, we have had the opportunity to help customers place their cloud spend in the context of the value produced by individual projects, combined with more granular resource management to limit spend.

In this talk, we will provide an overview of the lessons we have learnt in our quest to get a better handle on costs by using Metaflow. We will share best practices to consider when writing AI/ML workloads and show how constructs in the Metaflow framework can be used to answer questions that data scientists and MLEs ask themselves, such as:

How do my cloud costs break down over time and what workloads/cloud instances are driving these costs?

Are the executing workloads tuned to make maximum use of these expensive resources?

How can I refactor my workloads such that the expensive resources are used to their optimal capacity?
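
As a rough illustration of the first two questions, the sketch below attributes per-second cloud charges to workloads and instance types and estimates how much of the spend paid for idle capacity. It is a minimal sketch under stated assumptions, not Metaflow's API or the speaker's method: the `UsageRecord` fields, the instance names, the rates, and the utilization figures are all hypothetical.

```python
from collections import defaultdict
from dataclasses import dataclass


@dataclass(frozen=True)
class UsageRecord:
    """One billed run of a workload on an instance (hypothetical schema)."""
    workload: str
    instance_type: str
    seconds: int             # billed duration, per-second granularity
    hourly_rate_usd: float   # assumed on-demand price
    avg_utilization: float   # fraction of the resource actually used, 0.0 to 1.0


def record_cost(record):
    """Cost of a single record in USD."""
    return record.seconds / 3600 * record.hourly_rate_usd


def cost_by(records, dimension):
    """Total cost grouped by one attribute, e.g. 'workload' or 'instance_type'."""
    totals = defaultdict(float)
    for record in records:
        totals[getattr(record, dimension)] += record_cost(record)
    return dict(totals)


def idle_cost(records):
    """Portion of the spend that paid for unused capacity."""
    return sum(record_cost(r) * (1 - r.avg_utilization) for r in records)


# Made-up sample data for illustration only.
records = [
    UsageRecord("train", "gpu-large", 7200, 3.0, 0.5),
    UsageRecord("train", "cpu-small", 3600, 0.5, 0.8),
    UsageRecord("etl", "cpu-small", 1800, 0.5, 0.4),
]

per_workload = cost_by(records, "workload")
per_instance = cost_by(records, "instance_type")
wasted = idle_cost(records)
```

Grouping the same records by different dimensions shows which workloads and instance types drive the bill, while `idle_cost` points to where tighter resource requests would pay off most.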

In particular, we’ll focus on best practices to follow when working with large datasets in distributed multi-cloud and multi-cluster environments, and on how Metaflow constructs can help achieve that in a human-friendly manner, with very few lines of code.

The audience will be empowered to build and deploy production-grade Data/AI/ML pipelines while learning strategies for optimizing workloads to keep expensive ML/AI operations under control. Finally, the audience will have the tools to answer questions like “Am I using my resources to their fullest extent? If not, what are the opportunities for tuning the resource requirements of my AI/ML jobs so that they bin pack onto hardware and reduce overall costs?”
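
To make the bin-packing idea concrete, here is a minimal sketch of first-fit decreasing, one common packing heuristic. It is chosen here for illustration and is not necessarily what the talk, Metaflow, or any cloud scheduler uses. The job memory requests and the 32 GB instance size are hypothetical.

```python
def first_fit_decreasing(requests_gb, capacity_gb):
    """Pack memory requests onto as few fixed-size instances as the heuristic finds.

    Returns a list of instances, each a list of the requests placed on it.
    """
    instances = []  # requests placed on each instance
    free = []       # remaining capacity of each instance
    for request in sorted(requests_gb, reverse=True):
        if request > capacity_gb:
            raise ValueError(f"request of {request} GB exceeds instance capacity")
        for i, remaining in enumerate(free):
            if request <= remaining:
                # Reuse the first instance that still has room.
                instances[i].append(request)
                free[i] -= request
                break
        else:
            # No existing instance fits, so start a new one.
            instances.append([request])
            free.append(capacity_gb - request)
    return instances


# Hypothetical per-job memory requests (GB) after tuning, on 32 GB instances.
job_requests_gb = [16, 8, 8, 4, 24, 4]
packed = first_fit_decreasing(job_requests_gb, capacity_gb=32)
```

With these made-up numbers, six jobs that would each occupy their own instance fit onto two fully used instances, which is the kind of saving that right-sized resource requests make possible.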

PyData is an educational program of NumFOCUS, a 501(c)3 non-profit organization in the United States. PyData provides a forum for the international community of users and developers of data analysis tools to share ideas and learn from each other. The global PyData network promotes discussion of best practices, new approaches, and emerging technologies for data management, processing, analytics, and visualization. PyData communities approach data science using many languages, including (but not limited to) Python, Julia, and R.

PyData conferences aim to be accessible and community-driven, with novice to advanced level presentations. PyData tutorials and talks bring attendees the latest project features along with cutting-edge use cases.

00:00 Welcome!

00:10 Help us add time stamps or captions to this video! See the description for details.

Want to help add timestamps to our YouTube videos to help with discoverability? Find out more here: https://github.com/numfocus/YouTubeVideoTimestamps